As I write this, it’s been 10 days since Election Day.

It’s been quite an eventful post-election period so far, especially regarding the lightning-quick pace of Cabinet nominations announced by President-elect Donald Trump over the last several days. Whatever your thoughts on the election results and the Cabinet picks, there’s one thing that I think everyone can agree upon: Everything is going to change in Washington, D.C., very quickly. And because of that, much will change throughout the country, and even across the world.

Since our bailiwick at Colorado AI News is artificial intelligence, it feels necessary to address one question in particular: How much is AI regulation likely to change? The U.S. does not have a Secretary of Artificial Intelligence (at least, not yet), so what can we surmise about how AI regulation is likely to look under Trump compared with how it has looked under Biden and Harris?

A few things come to mind, and deregulation would be at the top of the list.

It seems likely that we’re about to enter an era of unprecedented deregulation that will far outpace what President Reagan was able to do 40 years ago. It’s nearly certain that Trump will make bold moves in that direction, judging by his many statements to that effect during the campaign and by the guiding principles of many of his early Cabinet picks.

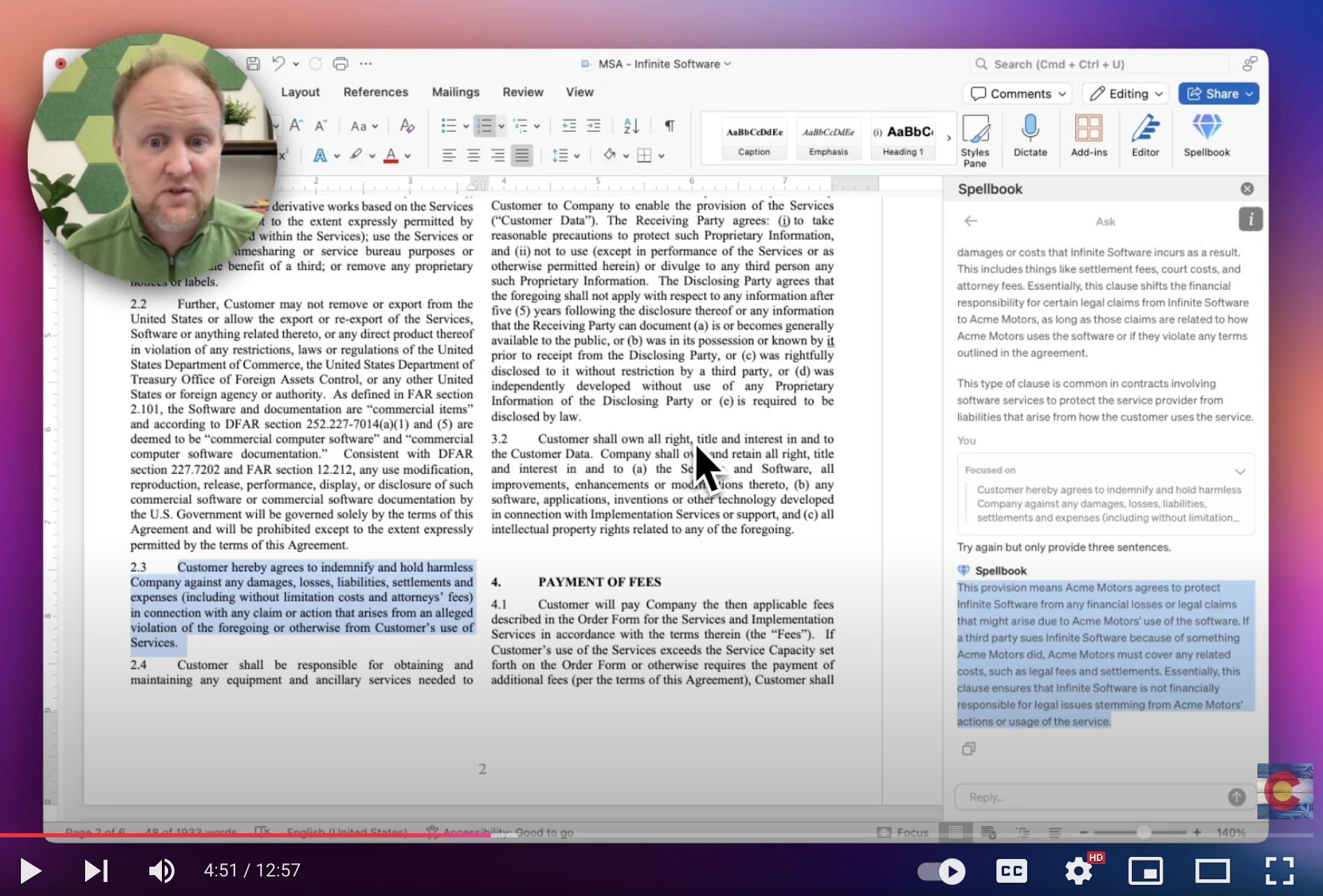

As I’ve indicated previously, I’m a big fan of The Artificial Intelligence Show podcast hosted by Paul Roetzer and Mike Kaput. There are many, many AI-related podcasts out there, but The AI Show is one of the best for a business-focused audience. In this week’s episode, Roetzer and Kaput discussed various ways in which the new administration’s policies likely would affect AI. Deregulation of various kinds was the most common theme, but there are also some uncertainties that are worth paying attention to.

With that in mind, I thought Roetzer and Kaput’s picks for AI topics to watch would be a good starting point for a discussion here. I want to give a hat-tip to The Artificial Intelligence Show’s hosts for raising these high-level points, and their thoughts and mine frequently overlap, but I am solely responsible for the analysis and opinions below.

1) Less of a Focus on AI Safety. President Biden created the AI Safety Institute (AISI) in October 2023 through an executive order that also addressed AI privacy, equity, civil rights, consumers’ rights, workers’ rights, and advancing AI leadership abroad. Virtually all sources agree that Trump will get rid of the EO, as executive orders are easily dispensed with when a new President comes to town. In fact, on the campaign trail, Trump clearly indicated that he would do just that.

On the other hand, as Forbes points out, not all of Silicon Valley speaks in unison on this issue. And there is some thought that Elon Musk (see #2) might recommend keeping some parts of the EO, if only to hamper the companies currently leading in AI (several of which are among the so-called Magnificent 7 tech stocks) and thereby give Musk’s AI firm, xAI, a bit of a boost. In fact, Musk’s likely strong influence on this issue and others earns him a category of his own to watch…

2) The Influence of Elon Musk. Over the last month or so, Musk’s more than $100 million in donations supporting the Trump campaign made him the President-elect’s highest-profile backer in the tech and AI industry and, arguably, the most significant backer, period. In the 10 days since Election Day, we’ve also learned that Musk and former GOP presidential candidate Vivek Ramaswamy will have active roles in the Trump administration as co-leads of what Trump and Musk are calling the Department of Government Efficiency. (The acronym DOGE, of course, is a wink at Musk’s favorite cryptocurrency, Dogecoin.)

What we don’t yet know is how Musk will try to influence Trump on a range of tech- and AI-related issues. Some analysts have suggested that Musk might actually push for strong regulation of the AI industry, or at least of its largest players. As noted above, while that may seem counterintuitive, it could help Musk’s AI company, xAI, which is not one of the AI titans.

That said, Axios just reported that xAI’s latest influx of cash has doubled the privately held company’s valuation to $50 billion. While not exactly trillion-dollar territory (yet), that does suggest that Musk’s $100 million investment in Trump has already paid off handsomely, especially considering that Tesla’s stock is up an astounding 25% since Election Day.

3) The Rise of Silicon Valley Accelerationists. As we’ve discussed before, the Accelerationists in the AI world are those enthusiasts who believe that absolutely no regulations should be placed on the development of AI tools. The movement, sometimes known as Effective Accelerationism (or e/acc), counts among its most prominent supporters the VC Marc Andreessen, who authored the Techno-Optimist Manifesto in October 2023.

The uncompromising tone of the 5,000-word “Manifesto” can perhaps best be summed up by this line: “We believe any deceleration of AI will cost lives. Deaths that were preventable by the AI that was prevented from existing is a form of murder.” How closely the Accelerationists’ insistence on no regulation of AI aligns with the views of President-elect Trump remains to be seen.

4) Uncertain Trump Tariffs. Trump has repeatedly promised tariffs of at least 10% on all imports to the U.S., while threatening that imports from China could see tariffs as high as 60% or even 100%. If Trump follows through on these promises, the tariffs likely would have severe ramifications for the tech and AI industries in this country, not to mention for the economy as a whole.

As Fortune reported, “[T]ariffs could increase costs for hardware that is critical for AI, such as chips, many of which are manufactured abroad. They may also disrupt the supply chains of tech companies and put U.S. businesses at a competitive disadvantage to companies in Asia and Europe, due to higher component costs, retaliatory tariffs, or foreign firms that can undercut on price.” Although a large group of Silicon Valley founders supported Trump this year, it's unlikely that they – or Musk – would welcome such disruption in their supply chains.

5) Possible State Legislation. There is considerable uncertainty about how legislatures in states like Colorado, California, and Washington will react to a lessened federal focus on AI safety, privacy, ethics, and bias concerns. Although these states and others could step up their own efforts in these areas, industry pressure likely would push in the other direction, as lawmakers will be wary of driving AI companies to less-regulated states.

When Gov. Polis signed the Colorado AI Act into law last spring, it was with the knowledge that it wouldn’t actually take effect until nearly two years later, in February 2026. This gave the legislature a long runway to tweak it in ways large and small so as to find the delicate (impossible?) balance between too much and too little regulation. With such dramatic changes coming to the federal landscape, it will be interesting to see how the Colorado legislature and Polis react.

Speaking of things “interesting,” I’m reminded of the expression, “May you live in interesting times.” It’s typically been described as a traditional Chinese blessing or, in its ironic sense, an old Chinese curse. It actually appears to be English in origin, with the closest Chinese expression translating to, “Better to be a dog in times of tranquility than a human in times of chaos.” However you choose to interpret it, I think it’s fair to say that all of us will be living in interesting times in the weeks, months, and years ahead.