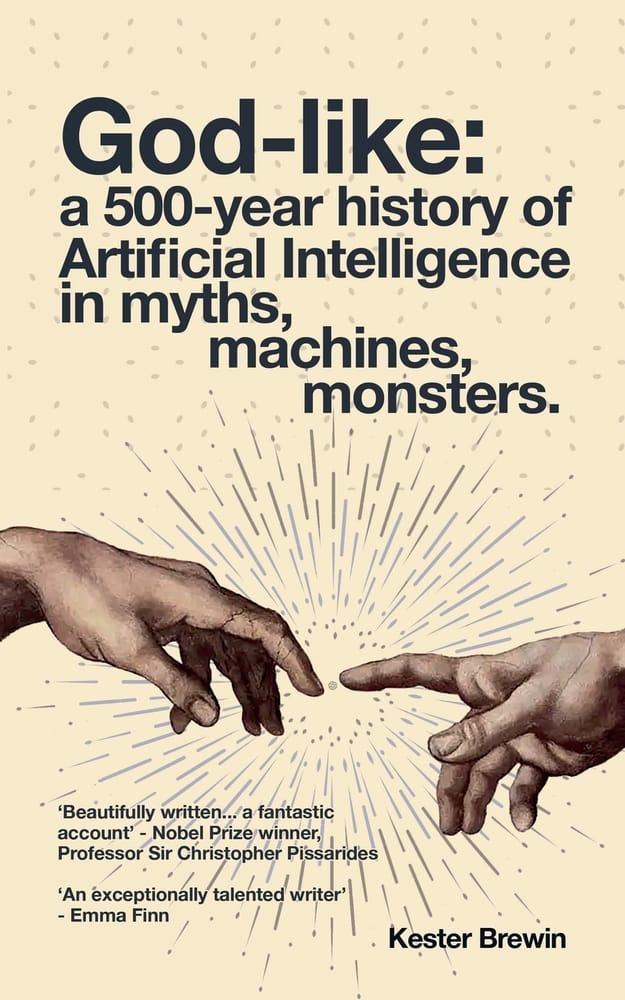

In God-like: A 500-year History of Artificial Intelligence in Myths, Machines, Monsters, published this spring, Kester Brewin connects a 16th-century monk who was burned at the stake for heresy, Michelangelo’s hidden messages in the Sistine Chapel, Christopher Marlowe’s depiction of Doctor Faustus selling his soul to the devil for unlimited knowledge, and Mary Shelley’s tale of scientific hubris in Frankenstein with ideas developed in the 20th century that led to the invention of computers and the internet.

By tracing the contributions of key tech pioneers, among them Vannevar Bush, Doug Engelbart, Claude Shannon, Robert Oppenheimer, John McCarthy, Marvin Minsky, Norbert Wiener, and Ken Kesey, Brewin shows how the emergence of artificial intelligence is the latest stage in humanity’s inexhaustible quest to augment human intelligence.

Brewin illustrates how both advocates and critics of AI echo aspirations and concerns regarding technology that have been articulated for centuries by philosophers, theologians, artists, and social commentators.

More specifically, God-like highlights how AI researchers frequently depict their own endeavors in theological terms. “God-like” is how Ian Hogarth, a British technologist, AI angel investor, and leader of the UK government’s AI taskforce, recently described AI in the Financial Times. Brewin opens with Hogarth’s description to explain why this book “quite deliberately draws on theological ideas and the philosophy of religion.” He argues:

I believe that AI requires a theological reading because this is a technology that is so large in scope and vast in implication that we need to draw on areas of thought that have been forged in the struggle to express the inexpressible, using language forms that we have somewhat lost. (7)

However, Brewin adds this caveat:

I do not believe in God, nor do I believe that there is any transcendent creator or force at work in our universe. But what I do believe is that there is a strong reflex in humanity that keeps generating god-like systems and, despite the progress of science and reason and declines in people declaring adherence to religion, this shows no sign of weakening. (7-8)

Brewin asserts that “god-talk” provides a necessary vocabulary for articulating the potential power of AI, which he calls “particularly dangerous as it has been given the power of language, and if we do not have language of similar power to speak to one another about it and be vocal, active agents deciding what future we want to be building, we will quickly find that it is too late” (8).

The power of language underlies both the appeal and the potential danger of recent AI developments. Drawing upon Yuval Noah Harari’s assertion in Homo Deus that “Language is the operating system of human culture,” Brewin elaborates on how language distinguishes humans from other animals, pointing out that our language makes the very concept of time and the future possible. “A gazelle can alert other gazelles to a present and existing threat but, without sophisticated language, can it reflect on the future, can it strategize and plan? Can it hope?” (108).

Besides enabling our perception of time (memories of the past and anticipation of future events), human language, Brewin notes, also creates our ontological discontent. “Birds do not appear to wrestle with the problem of being birds; they don’t seem to struggle to break free of bird-hood” (103). He elaborates:

For other animals, there appears to be a satisfaction in their ontology. They are enough. But for us, the force of creativity and curiosity seems connected not just to a sense of wonder at what might be possible to find, but a sense of incompleteness at our not having found everything yet. (104)

Ultimately, Brewin argues, technologies that once served religions have now become the religion of technology, a theological rhetoric that glorifies the pursuit of knowledge and progress at any cost. In response, Brewin calls upon readers to reinvest in human social relations and creativity, not by rejecting technology but by shaping it to serve, promote, and protect humanity.

The author’s interweaving of so many narrative threads is ambitious and fascinating, if at times chaotic. While much of this work derives from others’ analyses, God-like is a worthwhile and relatively short read (just under 200 pages), packed with anecdotes, quotations, and theory.

Brewin proudly announces that he did not use generative AI to produce or edit any portion of this book, although it could have benefitted from the insights of a skilled editor. Nonetheless, I find Brewin’s storytelling riveting. This is a book that I’ll return to many times. Anyone interested in human history, the development of technology, and the significance of emerging artificial intelligence should read and ponder the implications of God-like.