Generative AI (GenAI), a subfield of artificial intelligence, is improving at an incredibly rapid pace. The top providers of GenAI tools, such as Google, Microsoft, Anthropic, and Meta, are focused on delivering ever-faster and better versions of their products, and they are competing fiercely with each other for market share. Given that GenAI's adoption rate is still relatively low, this is a greenfield opportunity for these market leaders.

Meanwhile, other companies are scrambling to integrate GenAI-driven features into their solutions just as quickly. While the benefits of GenAI may be well recognized, its implementation is hampered by complexity, ethical concerns, and the lack of a clear understanding of how GenAI applies to a particular company's products and services. Despite this, the market is rife with AI tools and AI-related buzzwords. In fact, it is rare to encounter a proposed solution without hearing "GenAI" or "AI," as if GenAI had been in use for a long time.

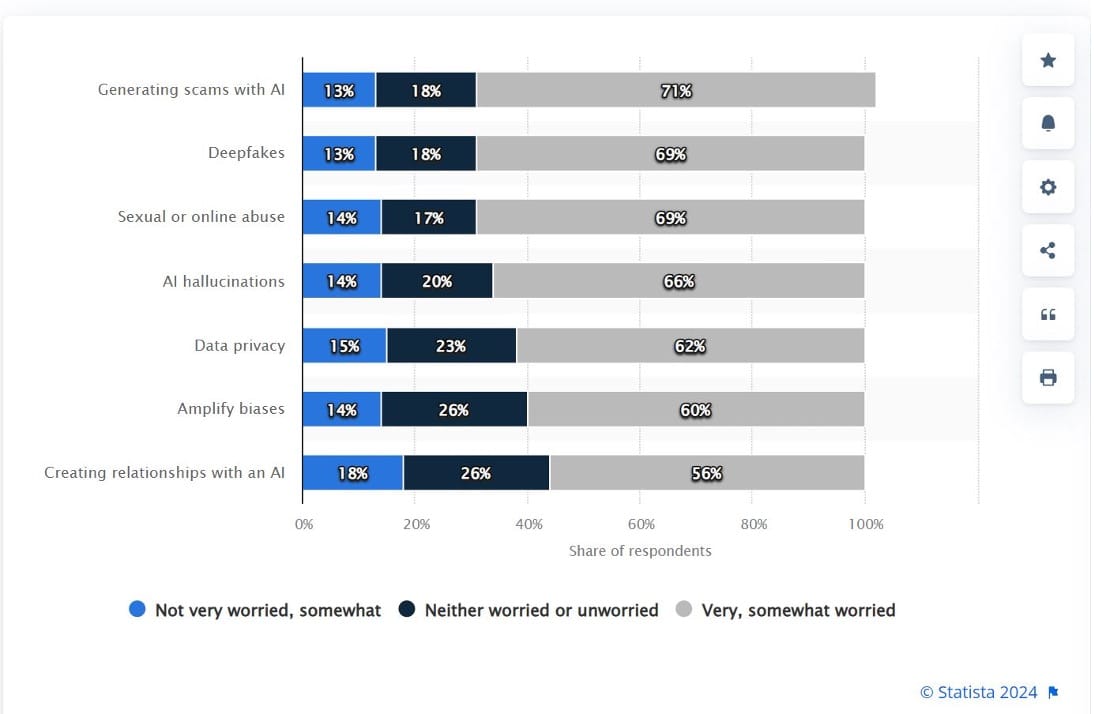

GenAI's complexity and opaque inner workings mean that its outputs cannot be easily explained, even by experts. This lowers customer confidence and contributes to GenAI's low adoption. A survey published by Statista earlier in 2024, and displayed below, shows substantial global concern over the use of GenAI, with anywhere from 56% to 71% of respondents indicating that they were "very" or "somewhat" worried about issues ranging from hallucinations to deepfakes and scams. Forbes and other sources echo similar concerns.

These concerns are further exacerbated by the "black box" nature of GenAI described above, and they grow considerably when human lives or livelihoods are at stake. Without a proper understanding of how GenAI arrives at its output, customer confidence in GenAI-driven solutions dips, because people tend to trust systems they can understand and verify.

The annual reports of several companies further confirm this high level of concern. Direct and indirect references to the slow adoption of GenAI-driven products and services, as well as industry-wide discussions, continue to underscore customer concerns and adoption challenges. In its 2024 annual report, for example, NVIDIA noted that, despite growing adoption, GenAI solutions carry ongoing reliability and ethical implications: "AI poses emerging legal, social, and ethical issues and presents risks and challenges that could affect its adoption, and therefore our business."

Similarly, Google's 2023 annual report and Meta's 2022 report refer to adoption issues related to AI-driven solutions. Google referred to data privacy and the potential misuse of AI, and Meta referred to user trust and the need to address ethical concerns to improve customer confidence in AI solutions.

In addition, should a company with GenAI-based solutions encounter legal or regulatory issues, it may struggle to defend itself, because it can point only to its models and training data and cannot precisely explain how the "black box" model arrived at its outputs. Imagine that a patient was diagnosed with cancer based on a GenAI model trained on vast amounts of medical data. If the reasoning behind the AI's output is unclear, both the oncologist and the patient may be skeptical of the conclusion.

What if AI hallucinations or biases in the training data were to lead to a misdiagnosis? If the patient challenges the diagnosis and it escalates into a legal dispute, the company may struggle to defend itself or to respond to regulatory challenges. This lack of transparency may cause customers to lose confidence in the company's products and services.

So, how can companies build customer confidence and increase adoption? No single approach can accomplish that on its own, although acceptance and adoption rates are likely to increase as companies gain experience with AI and customers grow more accustomed to GenAI-based solutions. However, companies can accelerate adoption through proactive measures. These include, but are not limited to, implementing good governance, training skilled talent, adhering to industry best practices for managing GenAI risks, and addressing bias and ethical concerns. One approach that can give companies a healthy competitive advantage is Explainable AI.

Explainable AI (XAI)

In the cancer diagnosis example above, if the oncologist understood the reasoning behind the diagnosis, the combination of their expertise and the GenAI-based prediction may be more acceptable to the patient. In this way, the "black box" GenAI tool becomes a helpful assistant to the oncologist, and the patient may perceive the tool's prediction as a second opinion. Greater trust in the AI's predictions from both the oncologist and the patient may, in turn, lead to wider adoption. Explainable AI, or XAI, is one approach to bringing transparency to AI models, allowing us to understand their outputs.

XAI, a subfield of artificial intelligence, provides not only decisions and predictions as part of its output but also interpretable results and the reasoning behind them.

"Interpretable results" address several major issues arising from non-transparent AI:

- They allow a company to review the reasoning behind the results and to fine-tune its models for greater accuracy.

- Internal fine-tuning with XAI builds the confidence of the company's stakeholders and helps secure the legal department's approval.

- Product, marketing, and sales teams gain assurance in representing the solution to their clients.

- The company will be on solid ground to defend its models should any regulatory inquiries, legal issues, or public complaints arise.

In essence, XAI makes the company's AI-driven solution more trustworthy.

And yet, the benefits of XAI extend beyond trustworthiness. XAI is also an effective risk mitigation strategy, because it helps the company validate its model's reasoning and accuracy. However, the company must continue to test and refine its models, because a model's accuracy can drift over time as the data it encounters changes. The company also needs to stay abreast of changing rules and regulatory requirements to keep its AI-based products and services compliant.

In addition to mitigating risks, XAI can provide a significant competitive advantage. Consider the cancer diagnosis example again. When comparing an AI-driven cancer diagnosis tool that uses XAI with a similar tool that does not, both oncologists and patients are more likely to trust the XAI-based solution, even if the other tool is much cheaper to use.

Or consider customers applying for home mortgage loans. A loan application may be rejected, or it may be approved at a higher interest rate than the customer expected. A bank using XAI can clearly explain the reasons behind those decisions, and that transparency can help the bank respond to a customer who believes they were treated unfairly, possibly because of discrimination.
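To make "explaining the reasons" concrete, here is a minimal sketch of what an interpretable loan decision could look like. It assumes a toy linear scoring model; the feature names, weights, baseline applicant, and approval threshold are all hypothetical and are not taken from any real lender. Because the model is linear, each feature's contribution to the score, measured against the baseline applicant, can be reported exactly, and the most negative contributions serve as the reasons behind a rejection. Real lending models are usually more complex, and XAI techniques for such models aim to produce comparable per-feature explanations.

```python
from dataclasses import dataclass

# Hypothetical weights for a toy linear credit-scoring model (illustrative only,
# not taken from any real lender).
WEIGHTS: dict[str, float] = {
    "credit_score": 0.01,     # per credit-score point
    "debt_to_income": -5.0,   # per unit of debt-to-income ratio
    "years_employed": 0.2,    # per year of employment
    "late_payments": -0.8,    # per recent late payment
}

# Hypothetical reference applicant; contributions are measured relative to it.
BASELINE: dict[str, float] = {
    "credit_score": 700,
    "debt_to_income": 0.30,
    "years_employed": 5,
    "late_payments": 0,
}

APPROVAL_THRESHOLD = 0.0  # hypothetical cutoff on the total score


@dataclass
class Decision:
    approved: bool
    score: float
    # (feature, contribution) pairs, most negative first
    reasons: list[tuple[str, float]]


def explain_decision(applicant: dict[str, float]) -> Decision:
    """Score an applicant and report each feature's contribution to the score."""
    # In a linear model, the score decomposes exactly into per-feature terms.
    contributions = {
        name: weight * (applicant[name] - BASELINE[name])
        for name, weight in WEIGHTS.items()
    }
    score = sum(contributions.values())
    # Sorting ascending puts the factors that hurt the application most first.
    reasons = sorted(contributions.items(), key=lambda item: item[1])
    return Decision(approved=score >= APPROVAL_THRESHOLD, score=score, reasons=reasons)


if __name__ == "__main__":
    decision = explain_decision(
        {"credit_score": 640, "debt_to_income": 0.45, "years_employed": 2, "late_payments": 1}
    )
    print("approved:", decision.approved)
    for feature, contribution in decision.reasons:
        print(f"{feature}: {contribution:+.2f}")
```

For the sample applicant above, the decision is a rejection, and the two leading reasons are the recent late payment and the high debt-to-income ratio. Measuring contributions against a reference applicant is a design choice that keeps each number readable as "how much this factor moved the score compared with a typical approved applicant."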

With the XAI advantage, the product, marketing, and sales departments can promote their offerings as XAI-driven. In today's environment, where the AI talent shortage is severe, XAI may also be a powerful retention tool, because many employees appreciate working for a company that values purposeful products and services over mere profits.

XAI implementations are challenging. They are resource intensive, and providing reasoning while preserving the company's secret sauce takes time and effort. Not surprisingly, this can lead to tradeoffs between performance and explainability, and if the model runs more slowly, a customer may switch to an alternative solution. Balancing performance and explainability requires companies to navigate these competing forces skillfully.

XAI is one aspect of AI bias and transparency. To learn more about these topics, and to get an overview of different XAI techniques, see my article, “Addressing AI Bias and Transparency.”

Conclusion

While GenAI offers tremendous potential, it also comes with significant risks. Because GenAI is a relatively new subfield of AI, regulatory requirements are still evolving as regulators develop a better understanding of its capabilities and implications.

Concerns about bias, misinformation, privacy, and other issues persist. By addressing aspects of AI bias and transparency, XAI can help mitigate some of these concerns. And because XAI provides the reasoning behind decisions without disclosing exactly how the model works, it not only builds confidence but can also provide companies with a competitive advantage.