Who is ultimately responsible for ensuring the ethical use of AI?

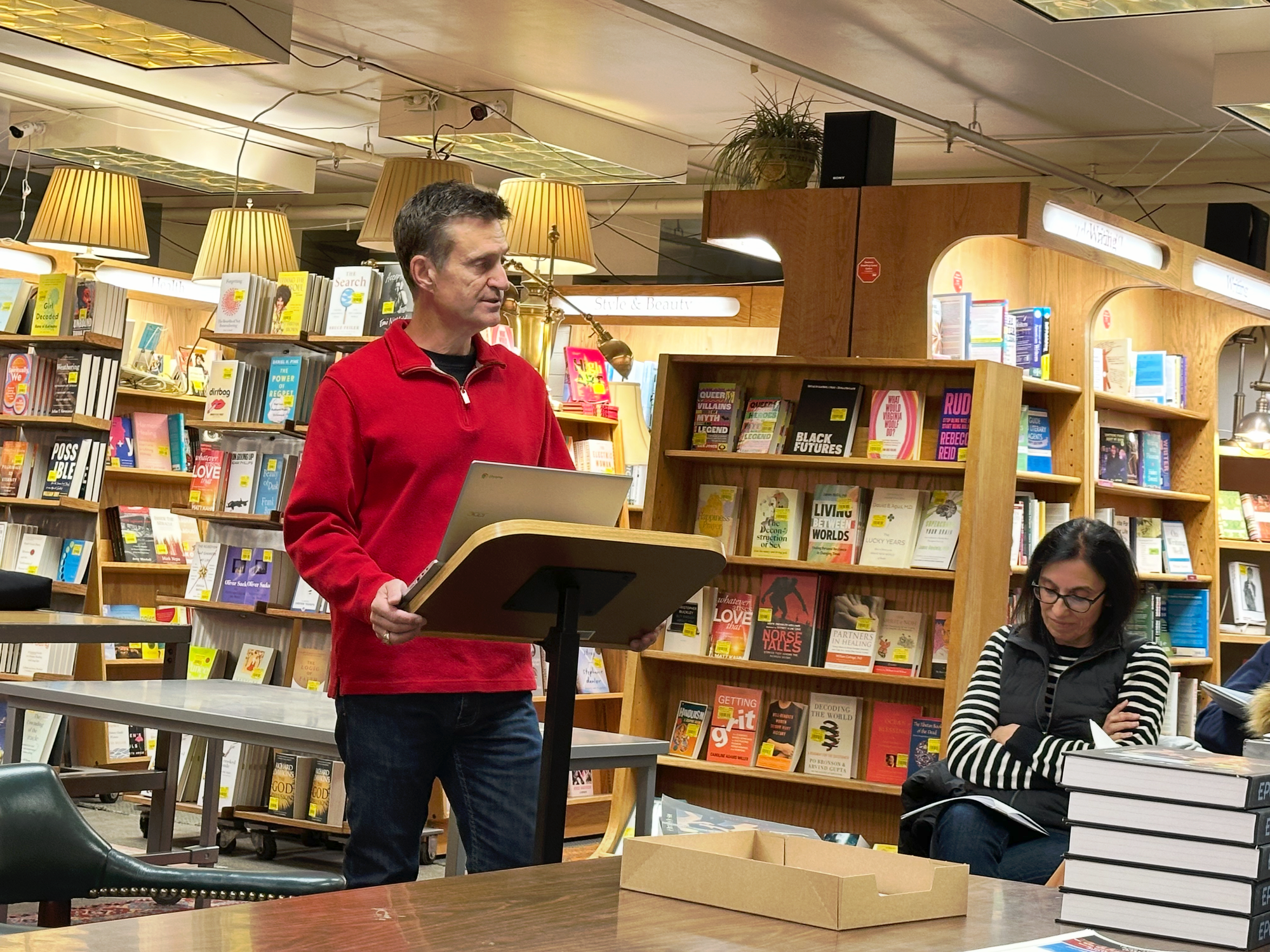

This is a question graduate students at the University of Colorado Boulder were asked to grapple with during the school’s annual Interdisciplinary Ethics Tech Competition earlier this month. All three of us on my team – two law students, Sydney Baker and Dillon Liu, and myself, a master's candidate in Computer Science – are incredibly honored to have won First Place at this year’s competition. In addition to providing an opportunity to work with students and professionals outside our regular field of study, the event posed serious questions about ethical problems in tech development that are worth considering in real-world contexts.

The competition featured nine participating teams, each made up of three or four students who served as analysts for a fictitious consulting firm tasked with navigating the financial, legal, ethical, and technical aspects of a complex business decision: Should a Series D startup known for its strict ethical standards take a $2.4 billion contract to license its facial recognition and drone navigation technologies to the U.S. Department of Defense (DoD)?

We’ve seen this scenario in real life several times before. For example, in 2018, Google employees wrote a letter protesting Project Maven, the company’s collaboration with a Pentagon pilot program that would use AI to improve the targeting of drone strikes. The more than 3,100 people who signed the letter urged Google to uphold its motto, “Don’t Be Evil.”

However, despite employees’ unwillingness to “build warfare technology,” it soon emerged that Amazon and Microsoft had landed similar contracts after Google’s departure. It’s not just tech giants: much newer and smaller companies are also weighing their ethical policies against the lucrative incentives of defense contracting.

In January 2024, OpenAI removed its policy restriction on military applications of its products. This opened the door for the company to forge a new partnership with Anduril to develop counter-drone technology, a move that was quite controversial. Entrepreneurs are starting to see value in the proposition, though, and plenty of startups have similarly partnered with the DoD.

There’s obviously a lot of opportunity in defense contracting, so how could one turn down such a large source of funding? Aerovista, the case competition’s fictitious client, would gain unprecedented research capacity and financial stability for years to come. Its commitment to stringent moral standards could also help it shape the DoD’s ethical principles, positioning the company to someday influence international ethics, law, and regulation.

Defense contracting also wouldn’t necessarily end Aerovista’s mission of developing technology for the public good, as plenty of military inventions, GPS among them, are now part of the day-to-day lives of millions of civilians. But as OpenAI CEO Sam Altman warned during a Senate Judiciary oversight hearing in May 2023, “If this technology goes wrong, it can go quite wrong.”

To that point, if anything malfunctioned, Aerovista could face wrongful death lawsuits or be held liable for privacy breaches or negligence. In fact, in 2023, family members of an Air Force special operations airman filed a wrongful death lawsuit after a mechanical issue caused his aircraft to crash. The suit named both the aircraft manufacturers, Boeing and Bell Textron, and the subcontractors that supplied metal for the aircraft’s gearbox. Even if a case never made it all the way to court, Aerovista could still face steep legal fees and irreparable reputational damage.

Partnering with the DoD would also significantly limit the company’s ability to expand internationally, largely because of the International Traffic in Arms Regulations (ITAR), which restrict the export of defense articles. In addition to the hefty investment Aerovista would need to make in regulatory compliance protocols, the company would have to grapple with continuously evolving international AI regulation.

Making such a large change to its corporate values would also call the company’s integrity into question. Harvard Business Review notes that “when employees sense that a leader’s decisions are at odds with company values, they are quick to conclude that the leader is a hypocrite.” Since Aerovista was founded on its leadership’s commitment to the public good, its leaders should be careful not to alienate the business partners, shareholders, and employees who trust the company’s commitment to ethical development.

As a startup, Aerovista likely can’t afford the added costs of high turnover (recruiting, onboarding, and training new employees, plus diminished continuity), much less the potential loss of investor funding.

Considering both sides, what would you do if you were part of Aerovista’s leadership? How much money are your values worth?

After carefully weighing the consequences, my team offered a (surprisingly controversial!) recommendation: Aerovista should decline the contract. We were the only team to defend this position, but our reasoning was that establishing itself as a trusted partner in ethical tech would give the company the best chance of success long after the five-year DoD contract would have ended.

The decision to develop responsible tech is rarely this simple. In the real world, there’s no clear line between good and bad actors. Because real situations are usually more nuanced than a company’s leadership weighing a single contract, the premise of the competition forces us to consider a fundamental question in AI ethics: Who’s ultimately responsible?

Ethical stewardship should not be borne solely by a company’s strategic leadership, even though its leaders certainly bear a significant share of that responsibility. Nor does it rest only with the employees who develop the technology or the shareholders who fund it. The responsibility to uphold the principles of ethical development is shared by all of us.

The conclusion of the Google employees’ Project Maven letter reminds us that “we cannot outsource the moral responsibility of our technologies to third parties.” That is true of large corporations such as Google, but it is equally true of startups, and of each of us as individuals.

Our decisions (what products we purchase, what services we subscribe to, what policies we support, and what companies we use) each contribute to the ethical landscape of AI. We cannot outsource these everyday moral decisions. We’re in an era where AI is more accessible than ever before, and what we choose to develop with this technology (or, more importantly, what we choose not to develop) will shape not only innovation in tech but also our society’s defining values.

--//--

The Interdisciplinary Ethics Tech Competition is co-hosted by the Daniels Fund Ethics Initiative Collegiate Program at Colorado Law, the Collegiate Program at the Leeds School of Business, and the Silicon Flatirons Center for Law, Technology, and Entrepreneurship, in partnership with CU Boulder's Department of Computer Science, Technology, Cybersecurity and Policy (TCP) Program, College of Engineering and Applied Science, and Department of Information Science.

Note that the opinions expressed in this article are solely those of the writer, and not necessarily those of her teammates, anyone else involved in the competition, or Colorado AI News.